
With a deep passion for learning and knowledge, Chris is an avid enthusiast of mathematics, computer science, physics, philosophy of mind, and economics.

If you have any questions or comments about what you read on this website, feel free to email him at: NoSpam@ChristopherLind.Com (Replace NoSpam with Chris)


Article: What is information 4-10-2005

(Note: This article assumes you know what a logarithm is.)

What Is Information?

Say you are travelling on vacation and you get lost. What does it mean to get lost?

It means you are uncertain about the direction you are to go. Do you turn right

or left at the stop sign? You could simply guess which way to go, or you could stop and

ask for directions. If you guess, you have a 50% chance of being correct, or a probability

of 1/2.

(This diagram is called a TREE. You can see that you have exactly 2 choices at the intersection...

Turn Left or Turn Right. Only 1 of these 2 choices is the correct one, so you

have a probability of 1/2 of making the correct choice.)

If you stop and ask someone if you should turn right or left, you will have acquired information.

Similarly, if you guess that you should turn left and you discover that you have guessed

incorrectly, you will have also acquired information (you now know that the correct direction

to take at the stop sign is a right-hand turn).

So, in some way, information is related to uncertainty (or probability), and acquiring information

means eliminating the uncertainty that you had (or ruling out possibilities).

If you stop and ask someone for instructions, and they tell you more than just to turn

right or left at an intersection, but give you a complete, turn-by-turn set of instructions

through a series of 5 intersections, then you will have acquired even more information.

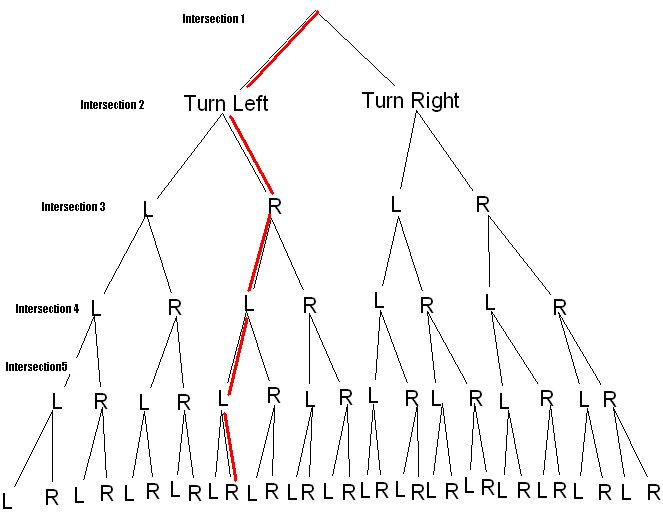

If at each of these intersections, you can only turn right or left, then there is a probability of 1/2 of

guessing the right direction to go at each intersection. This corresponds to (1/2)*(1/2)*(1/2)*(1/2)*(1/2) = 1/32.

There is a probability of only 1/32 of guessing your way correctly to the destination.

(Highlighted in red is one of the 32 possible choices you can make if you have to

guess your way through 5 intersections. The highlighted branch corresponds to

Turning Left at the first intersection, Right at the second intersection, Left at

the third, Left at the fourth, and Right at the fifth.)

This means that if you HAD to guess your way through 5 intersections, you would be only 3.1%

certain that you would arrive at your destination. On the other hand, you had a certainty of

50% that you would guess your way to your destination correctly if you only had to guess

at a single intersection, as in the first example.
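If you would like to check the 1/32 figure yourself, here is a small Python sketch that lists every branch of the tree and counts them. It assumes that every route is equally likely and, purely for illustration, treats the route highlighted in the diagram as the correct one. The names `all_routes`, `correct_route`, and `p_guess` are made up for this example.

```python
from fractions import Fraction
from itertools import product

# Assumed setup: 5 intersections, 2 choices at each (Left or Right).
NUM_INTERSECTIONS = 5
CHOICES = ("L", "R")

# Every possible sequence of turns; each one is a single branch of the tree.
all_routes = list(product(CHOICES, repeat=NUM_INTERSECTIONS))

# For illustration only: treat the highlighted branch as the correct route
# (Left, Right, Left, Left, Right).
correct_route = ("L", "R", "L", "L", "R")

# Chance of guessing that one route if all routes are equally likely.
p_guess = Fraction(all_routes.count(correct_route), len(all_routes))

print(len(all_routes))  # 32
print(p_guess)          # 1/32
print(float(p_guess))   # 0.03125, or about 3.1%
```

Changing `NUM_INTERSECTIONS` to 1 gives the single stop sign from the first example, with 2 routes and a probability of 1/2.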

So, the less certainty you have about an event or situation (such as how to get to where you need to go),

the more information you receive when you get an answer. You acquired MORE information when you were given

turn-by-turn instructions through 5 intersections (corresponding to only 3.1% certainty) as opposed

to when you only needed to know which way to turn at ONE intersection (corresponding to 50% certainty).

A question you may have at this point is, what do probability and uncertainty have to do with the

bits and bytes that are used to measure information content and storage inside of a computer? The answer

was discovered in the middle of the 20th century by a man named Claude Shannon.

How to measure (quantify) information.

We live in the information age, and it is pretty common knowledge these days that computers are made up

of patterns of nothing but 1's and 0's. The smallest unit of information is called a bit. People

buy hard drives measured in gigabytes, or billions of bytes (a byte is 8 bits). Conceptually, a single bit can

be thought of as one light bulb.

When the light bulb is on, it represents the number 1.

When the light is off, it represents the number 0.

So a single bit can be a 1 or a 0.

Likewise, 1 quarter can also represent 1 bit of information...either a 0 or a 1.

For example,

heads can represent 1

and tails can represent 0

How does a quarter tie in with the example I gave at the beginning of this article about information

relating to uncertainty? Here it is...

The mathematical relationship between the probability of an event occurring (which I will

label P) and the information specified by the event (which I will call I) is:

I = -log2(P)

(Note: log2 stands for the base-2 logarithm. Historically, tables of logarithms were

among the earliest calculating aids!)

What does this mean?

I just said that a quarter contains 1 bit of information. Likewise, if you toss a quarter in the air, you have

a 50% chance of it landing heads (and a 50% chance of tails), which is a probability of P = 1/2.

Plugging 1/2, or 0.50 (50%), into the above formula yields

I = -log2(1/2)

I = -(-1) = 1 bit!
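The same calculation can be done in a couple of lines of Python. This is only a sketch of the formula I = -log2(P). The function name `information_bits` is made up for this example, and `math.log2` is the base-2 logarithm from Python's standard library.

```python
import math

def information_bits(p: float) -> float:
    """Return the information, in bits, of an event with probability p."""
    # The formula only makes sense for probabilities in the range (0, 1].
    if not 0 < p <= 1:
        raise ValueError("p must be greater than 0 and at most 1")
    return -math.log2(p)

print(information_bits(1 / 2))  # 1.0 (one coin toss)
print(information_bits(1 / 4))  # 2.0 (a less likely event carries more bits)
```

Notice that the smaller the probability you plug in, the larger the number of bits that comes out, which matches the idea that less certainty means more information.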

Mathematically, an event with a probability of 50% corresponds to 1 bit! This is why the bit is the smallest unit

of information. It literally represents the simplest possible choice that you can make. You can either

choose option A or option B...you have no other choices, and if you were to remove either of the options

then you would have no choice or uncertainty at all, and hence no information!

What is the probability that tossing 3 quarters in the air will turn up heads each time, and how

much information is specified by this event?

There is a probability of 1/2 of a coin landing heads once, and

a probability of (1/2)*(1/2)*(1/2) = 1/8 of it landing heads 3 times in a row.

So P=1/8

and

I = -log2(1/8)

I = - (-3) = 3 bits.

So 3 bits corresponds to 3 quarters!
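Here is a short Python sketch of the same pattern for any number of fair tosses. It assumes each toss is independent with a probability of 1/2, so the probabilities multiply while the bits simply add up. The function name `fair_tosses` is made up for this example.

```python
import math
from fractions import Fraction

def fair_tosses(n: int):
    """Return (probability of one specific outcome of n fair tosses, bits)."""
    # Independent fair tosses: multiply 1/2 by itself n times.
    p = Fraction(1, 2) ** n
    # Information in bits, using I = -log2(P).
    bits = -math.log2(float(p))
    return p, bits

for n in (1, 3, 5):
    p, bits = fair_tosses(n)
    print(n, p, bits)
# 1 1/2 1.0
# 3 1/8 3.0
# 5 1/32 5.0
```

The last line is the driving example from earlier in the article: guessing your way through 5 left-or-right intersections has a probability of 1/32, so a full set of turn-by-turn directions is worth 5 bits.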

This mathematical relationship between uncertainty and information was discovered by one of the pioneers of

computer science, Claude Shannon, and is called Shannon Information in his honor.
